Abstract

Benefits

Optimised for Parallel Computing

- We can compute pixels in batches or in parallel, because each pixel can usually be calculated independently of the others

- Allows us to train deep learning models, which perform huge numbers of matrix multiplications on the dataset
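Both points rest on the same property: each output value can be computed without waiting for any other. Here is a minimal sketch in plain Python that uses only the standard library. In a matrix product, every cell `C[i][j]` is an independent dot product, so the cells can be handed out as separate tasks. The names `dot_cell` and `matmul_parallel` are hypothetical and chosen only for illustration.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import product


def dot_cell(a, b, i, j):
    """Compute one output element C[i][j]; it reads only row i of A and column j of B."""
    return sum(a[i][k] * b[k][j] for k in range(len(b)))


def matmul_parallel(a, b, workers=4):
    """Multiply A (n x m) by B (m x p), treating every output cell as an independent task."""
    n, p = len(a), len(b[0])
    cells = list(product(range(n), range(p)))
    # No cell depends on another, so the tasks may run in any order or all at once.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        values = list(pool.map(lambda ij: dot_cell(a, b, *ij), cells))
    # Executor.map yields results in input order, so the grid can be rebuilt row by row.
    return [values[r * p:(r + 1) * p] for r in range(n)]


A = [[1, 2], [3, 4]]
B = [[5, 6], [7, 8]]
print(matmul_parallel(A, B))  # [[19, 22], [43, 50]]
```

In standard CPython, the global interpreter lock means these threads will not actually speed up pure-Python arithmetic. The sketch only shows how the work splits into independent pieces. A GPU exploits the same split with thousands of hardware threads, for example one thread per output cell in a naive kernel.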

Cons

Hard to program

- That is why Nvidia’s CUDA Toolkit is good news: it lets us program the GPU for general-purpose work, giving it the flexibility it otherwise lacks
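The programming model CUDA exposes works roughly like this. You write the code for a single thread and then launch it over a grid of thread blocks. Each thread works out which element it owns from its block and thread indices. The sketch below emulates that pattern sequentially in plain Python. It is not CUDA code, and it runs on the CPU. `blockIdx.x`, `blockDim.x` and `threadIdx.x` are real CUDA built-in variables, while `vector_add_kernel` and `launch` are hypothetical stand-ins.

```python
import math


def vector_add_kernel(block_idx, block_dim, thread_idx, x, y, out):
    """Body run by ONE emulated GPU thread, mirroring a CUDA kernel's per-thread code."""
    # Global index, computed as in CUDA: blockIdx.x * blockDim.x + threadIdx.x
    i = block_idx * block_dim + thread_idx
    # The last block may have more threads than remaining elements, so guard the bounds.
    if i < len(out):
        out[i] = x[i] + y[i]


def launch(kernel, grid_dim, block_dim, *args):
    """Sequential stand-in for a CUDA launch such as kernel<<<grid_dim, block_dim>>>(...)."""
    # On a real GPU these iterations run concurrently; here they run one after another.
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            kernel(block_idx, block_dim, thread_idx, *args)


n = 10
x = list(range(n))
y = [10 * v for v in x]
out = [0] * n
threads_per_block = 4
blocks = math.ceil(n / threads_per_block)  # 3 blocks -> 12 threads for 10 elements
launch(vector_add_kernel, blocks, threads_per_block, x, y, out)
print(out)  # [0, 11, 22, 33, 44, 55, 66, 77, 88, 99]
```

In actual CUDA C++, the kernel would be a `__global__` function launched with the `<<<blocks, threads_per_block>>>` syntax. The loops in `launch` would disappear because the hardware runs the threads concurrently.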

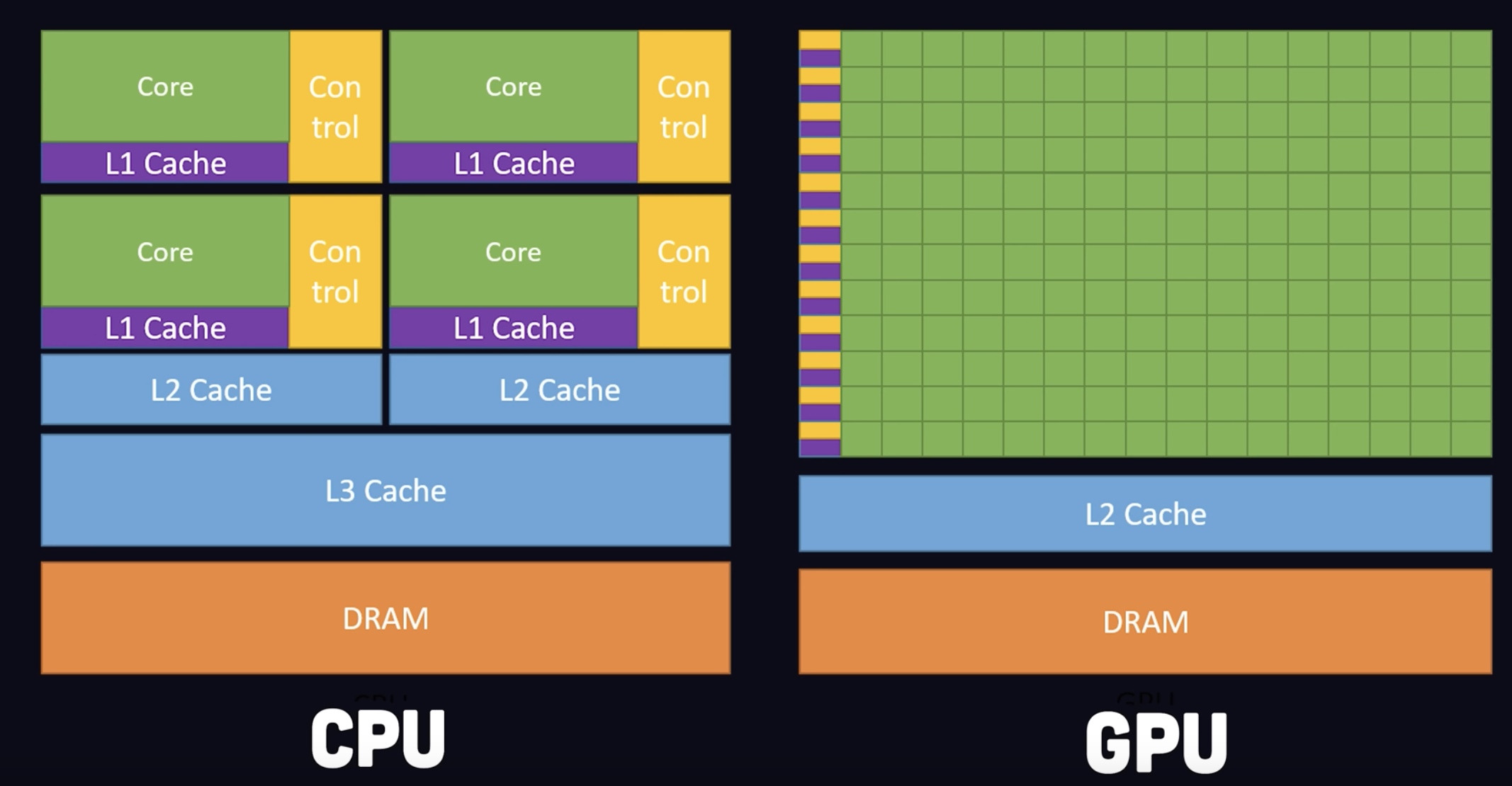

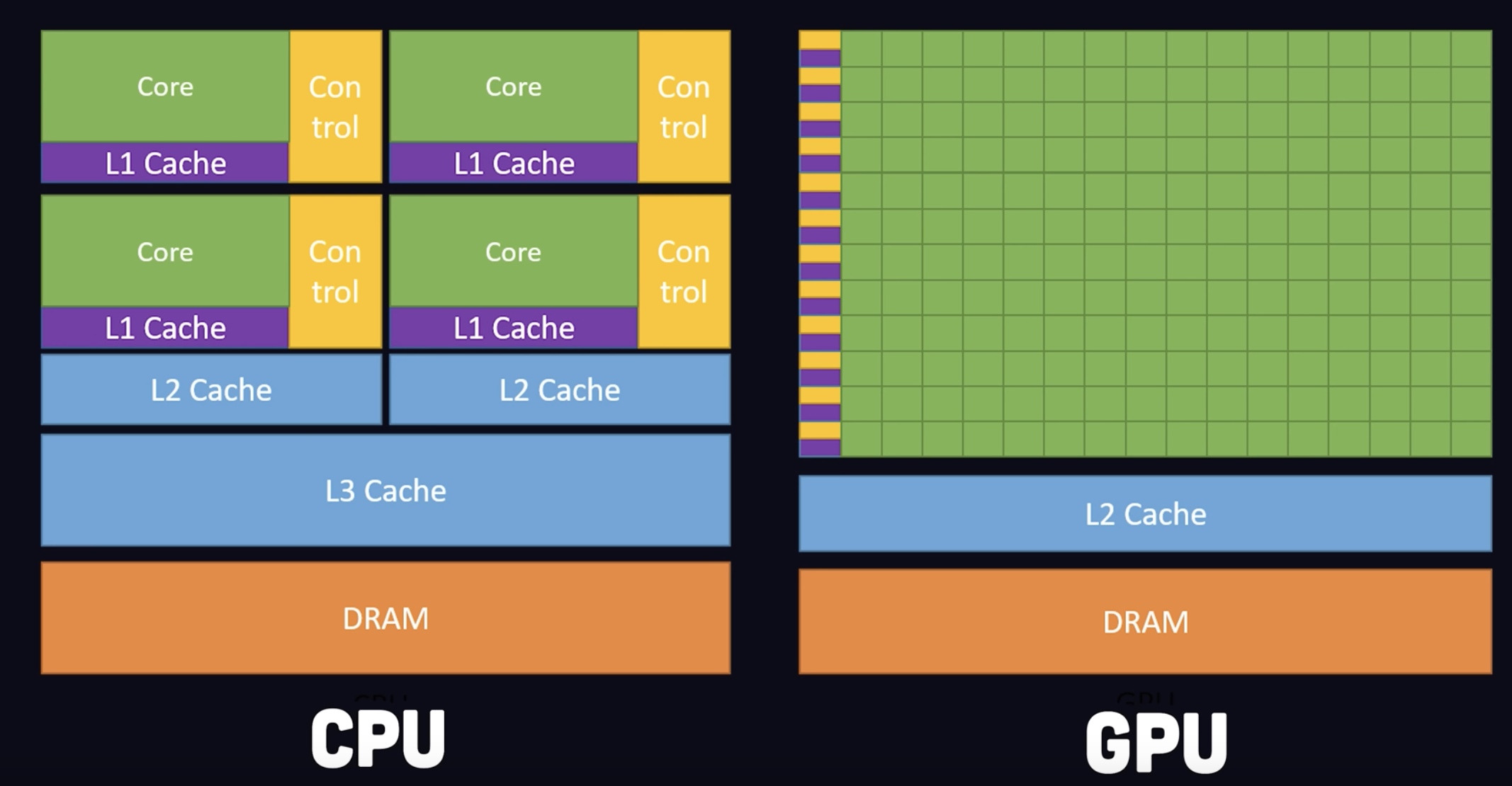

Comparison with CPU

- A single CPU core is far more powerful than a GPU core and can handle complicated logic such as branching

- Many real-world applications also need to execute instructions sequentially, which plays to the CPU's strengths

- The GPU shines when we need parallelism, i.e. many independent operations that can run at the same time
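Here is a small contrast in plain Python, using hypothetical function names. `collatz_steps` must wait for each step's result before taking the next one, and every step branches. That is the kind of work a strong CPU core handles well. `square_all` has no dependencies between elements, which is the kind of work a GPU can spread across many cores.

```python
def collatz_steps(n):
    """Sequential and branch-heavy (n >= 1): each step needs the previous value and takes an if/else."""
    steps = 0
    while n != 1:
        # The branch taken depends on the value produced by the previous iteration.
        n = n // 2 if n % 2 == 0 else 3 * n + 1
        steps += 1
    return steps


def square_all(values):
    """Parallel-friendly: every output depends only on its own input, so all could run at once."""
    return [v * v for v in values]


print(collatz_steps(6))          # 8
print(square_all([1, 2, 3, 4]))  # [1, 4, 9, 16]
```

Running `collatz_steps` for many different starting values is itself independent work. However, the branches and uneven loop lengths would likely make it a poorer fit for a GPU than `square_all`.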